Introduction

For years I carried a question across every AI and ML project: how do you structure development so it stays production-ready, extensible, optimized, maintainable, and reproducible? After a decade of trial and error, I have converged on five pillars that form a hyper-optimized AI workflow.

How am I supposed to structure and develop my AI and ML projects?

I spent years reading papers, watching tutorials, and experimenting with tooling in search of an answer. Each approach delivered part of the puzzle, but something always felt missing. Only in the last couple of years did the full picture lock into place, and this post is the first step in documenting that playbook.

You can watch a live walk-through of the approach in the Hamilton meetup talk "Hyper-Optimized AI Workflows".

Throughout this post and the broader series I use AI, ML, and Data Science interchangeably. The principles apply equally to all three.

1 — Metric-Based Optimization

Every project needs explicit metrics that represent success and the constraints you are willing to accept. Those metrics typically fall into three buckets:

- Predictive quality: accuracy, F1, recall, precision

- Cost: dollar spend, FLOPs, model size (MB)

- Performance: training time, inference latency

You can optimize a single north-star metric or blend several signals. Examples include 0.7 * F1 + 0.3 * (1 / latency_ms) or 0.6 * AUC_ROC + 0.2 * (1 / training_hours) + 0.2 * (1 / cloud_cost_usd).

Once your team agrees on the objective and constraints, the workflow becomes a search problem: build, measure, and iterate toward the best feasible configuration.
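
To make the search framing concrete, here is a minimal sketch that scores candidate runs with the first blended metric above and keeps only those that satisfy hard constraints. The `RunResult` fields, the helper names, the thresholds, and the measurements are all invented for illustration; they are not taken from any real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    """Measured outcome of one candidate configuration (illustrative fields)."""
    name: str
    f1: float
    latency_ms: float
    cost_usd: float


def score(run: RunResult) -> float:
    """Blend quality and speed using the 0.7 / 0.3 weights from the text."""
    return 0.7 * run.f1 + 0.3 * (1 / run.latency_ms)


def is_feasible(run: RunResult, max_latency_ms: float, max_cost_usd: float) -> bool:
    """Hard constraints: a run that violates either one is rejected outright."""
    return run.latency_ms <= max_latency_ms and run.cost_usd <= max_cost_usd


def best_feasible(runs, max_latency_ms, max_cost_usd):
    """Return the highest-scoring run that satisfies the constraints, or None."""
    feasible = [r for r in runs if is_feasible(r, max_latency_ms, max_cost_usd)]
    return max(feasible, key=score, default=None)


# Made-up measurements, for illustration only.
runs = [
    RunResult("small", f1=0.80, latency_ms=50.0, cost_usd=1.0),
    RunResult("large", f1=0.90, latency_ms=400.0, cost_usd=9.0),
    RunResult("medium", f1=0.86, latency_ms=120.0, cost_usd=3.0),
]
winner = best_feasible(runs, max_latency_ms=200.0, max_cost_usd=5.0)
print(winner.name if winner else "no feasible run")
```

One thing the sketch makes visible: with raw units, `1 / latency_ms` is tiny next to F1 (0.02 at 50 ms), so the blend is dominated by F1. Rescaling each term to a comparable range before weighting is a common adjustment.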

Andrew Ng has a concise primer on picking a single evaluation metric. It is a great way to align teams before optimization begins.

2 — Interactive Developer Experience

AI development is inherently interactive. Beyond writing code that executes without errors, we constantly inspect data, intermediate artifacts, and model outputs. Tools like Jupyter notebooks excel in this exploratory loop because they let us test APIs, compare implementations, and look at results side by side.

The challenge is keeping that exploration in sync with production code. Treat notebooks as a first-class companion to the codebase: prototype quickly, then upstream working logic into modules, tests, and pipelines so nothing critical stays trapped in ad hoc cells.

Keep notebooks deterministic, runnable top to bottom, and version-controlled. Otherwise reproducibility collapses when someone else tries to run your work.
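
As one hedged illustration of promoting notebook logic, the sketch below shows a helper that might start life in a cell and then move into a module with a test. The function name `split_train_test` and its parameters are hypothetical; the point is only that a private, seeded random generator keeps results independent of cell execution order.

```python
import random


def split_train_test(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of `items` with a private, seeded RNG and split it.

    Using random.Random(seed) instead of the global random module means the
    result does not depend on which notebook cells ran earlier.
    """
    if not 0.0 <= test_fraction <= 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def test_split_is_reproducible():
    """The kind of check that can live in the test suite once logic is promoted."""
    first = split_train_test(range(10), seed=42)
    second = split_train_test(range(10), seed=42)
    assert first == second
    train, test = first
    assert len(test) == 2 and sorted(train + test) == list(range(10))
```

The notebook then imports the function from the module instead of redefining it, so the exploratory view and the production code cannot drift apart.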

3 — Production-Ready Code

"Production-ready" varies by organization. It could mean meeting an SLA, passing a reliability threshold, or exposing a stable API. The common denominator is wrapping your workflow behind a serviceable interface and deploying it where other systems or users can reach it.

A retrieval-augmented generation workflow over PDFs might contain dozens of moving parts, yet it can still present a simple API surface:

upload_document(file: PDF) -> document_id

query_document(document_id, query, output_format) -> response

    def upload_document(file: bytes) -> str:
        """Persist the document and return a handle for future queries."""
        ...

    def query_document(document_id: str, query: str, output_format: str = "markdown") -> str:
        """Run retrieval + generation and return a formatted answer."""
        ...
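
To show how a caller would see that surface, here is a toy in-memory stand-in. It is a sketch under loud assumptions: the class name `InMemoryDocumentService` is hypothetical, the "document" is treated as UTF-8 text rather than a parsed PDF, and a naive keyword match takes the place of real retrieval and generation.

```python
import hashlib


class InMemoryDocumentService:
    """Toy stand-in for the real pipeline behind the two-function API."""

    def __init__(self):
        self._documents = {}  # document_id -> decoded text

    def upload_document(self, file: bytes) -> str:
        """Store the document and return a content-derived handle."""
        document_id = hashlib.sha256(file).hexdigest()[:12]
        # A real system would parse the PDF here; this sketch assumes UTF-8 text.
        self._documents[document_id] = file.decode("utf-8", errors="replace")
        return document_id

    def query_document(self, document_id: str, query: str, output_format: str = "markdown") -> str:
        """Return lines sharing a word with the query (placeholder for retrieval + generation)."""
        if document_id not in self._documents:
            raise KeyError(f"unknown document_id: {document_id}")
        terms = set(query.lower().split())
        hits = [
            line for line in self._documents[document_id].splitlines()
            if terms & set(line.lower().split())
        ]
        if output_format == "markdown":
            return "\n".join(f"- {line}" for line in hits)
        return "\n".join(hits)


service = InMemoryDocumentService()
doc_id = service.upload_document(b"Cats purr.\nDogs bark.")
answer = service.query_document(doc_id, "dogs")
```

Because callers only depend on the two method signatures, the internals can later be replaced by the full pipeline without changing client code.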

Shipping changes should feel routine: when you fix a bug or add a new configuration, getting it to production should take no more than a button press or a CI run.

4 — Modular and Extensible Code

Modularity keeps your code open for extension but closed for modification. Each logical step in the workflow should accept interchangeable implementations that share an interface. That way you can drop in new options, compare them against incumbents, and roll back without disrupting the rest of the system.

    PIPELINE = {
        "retriever": hp.select([
            hp.nest("configs/vector_db.py"),
            hp.nest("configs/bm25.py"),
        ]),
        "reranker": hp.optional(hp.nest("configs/rerank_cross_encoder.py")),
        "llm": hp.nest("configs/llm.py"),
    }

This pattern keeps implementations swappable while preserving shared inputs and outputs.

Once enough competing implementations exist, your project enters what I call a "superposition of workflows": you can spin up any specific configuration, benchmark it, and promote winners into production without rewriting core logic.
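
The same idea can be sketched in plain Python, without any configuration library. Everything below is illustrative: the `Retriever` protocol, the two placeholder retrievers, the `reverse_rerank` function, and the registries are hypothetical names, and the "results" are dummy strings rather than real retrieval output.

```python
from itertools import product
from typing import Protocol


class Retriever(Protocol):
    """Shared interface: any retriever maps a query to a list of passages."""

    def retrieve(self, query: str) -> list[str]: ...


class VectorRetriever:
    def retrieve(self, query: str) -> list[str]:
        return [f"vector hit for {query!r}"]  # placeholder result


class BM25Retriever:
    def retrieve(self, query: str) -> list[str]:
        return [f"bm25 hit for {query!r}"]  # placeholder result


def reverse_rerank(passages: list[str]) -> list[str]:
    """Placeholder reranker; a real one would score passages against the query."""
    return list(reversed(passages))


# Registries: adding an option means adding an entry, not editing build_workflow.
RETRIEVERS = {"vector_db": VectorRetriever, "bm25": BM25Retriever}
RERANKERS = {"none": None, "reverse": reverse_rerank}  # None = step skipped


def build_workflow(retriever_name: str, reranker_name: str):
    """Assemble one concrete workflow from named implementations."""
    retriever = RETRIEVERS[retriever_name]()
    reranker = RERANKERS[reranker_name]

    def run(query: str) -> list[str]:
        passages = retriever.retrieve(query)
        return reranker(passages) if reranker else passages

    return run


# Enumerate every combination and run each one on the same query.
all_configs = list(product(RETRIEVERS, RERANKERS))
results = {cfg: build_workflow(*cfg)("what is hamilton?") for cfg in all_configs}
```

Each key in `results` names one concrete workflow, so benchmarking them side by side reduces to comparing values in a dictionary.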

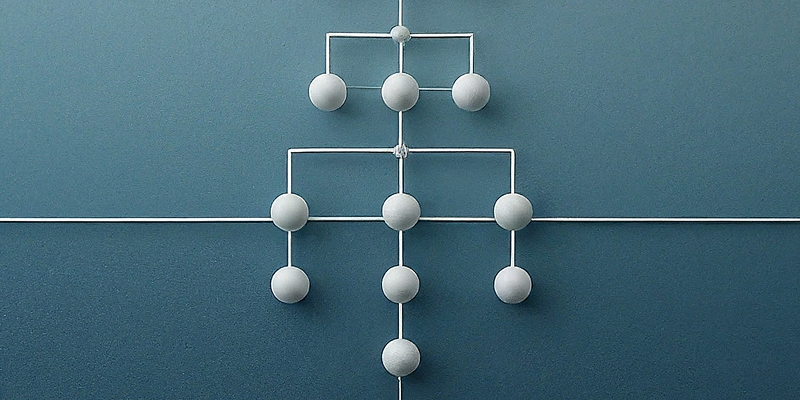

5 — Hierarchical and Visual Structures

Extend modularity beyond individual components to entire sections of the workflow. Treat preprocessing, retrieval, generation, and evaluation as top-level modules with nested sub-components. Visualizing the system this way lets you zoom in on a subsection, improve it in isolation, then plug it back into the larger DAG.

Working hierarchically unlocks several advantages:

- Reduced mental overhead: concentrate on one slice while the rest remains stable.

- Easier collaboration: teammates or AI assistants get clear interfaces for each block.

- Reusability: encapsulated modules travel across projects with minimal changes.

- Self-documentation: diagrams and configuration trees reflect the architecture without diving into source files.
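
A minimal sketch of the hierarchy described above, assuming a simple tree of named blocks. The `Node` class and the sub-component names (`parse_pdf`, `chunk_text`, and so on) are made up for illustration; only the four top-level sections come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One block of the workflow; children are its nested sub-components."""
    name: str
    children: list["Node"] = field(default_factory=list)

    def find(self, name: str):
        """Depth-first lookup so a single subsection can be pulled out."""
        if self.name == name:
            return self
        for child in self.children:
            found = child.find(name)
            if found is not None:
                return found
        return None

    def render(self, indent: int = 0) -> str:
        """Return an indented outline of this node and everything below it."""
        lines = ["  " * indent + self.name]
        lines += [child.render(indent + 1) for child in self.children]
        return "\n".join(lines)


# Hypothetical sub-components under the four top-level sections.
workflow = Node("rag_workflow", [
    Node("preprocessing", [Node("parse_pdf"), Node("chunk_text")]),
    Node("retrieval", [Node("embed_query"), Node("search_index")]),
    Node("generation"),
    Node("evaluation"),
])

# Zoom in on one subsection and print only that slice of the tree.
print(workflow.find("retrieval").render())
```

Calling `render()` on the root instead prints the whole outline, which is a cheap text substitute for a diagram when no visualization tool is at hand.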

Summary

The five pillars of a hyper-optimized AI workflow:

- Metric-Based Optimization: define measurable objectives and constraints.

- Interactive Developer Experience: pair exploratory tooling with disciplined promotion into the codebase.

- Production-Ready Code: expose workflows through stable APIs and streamline deployments.

- Modular and Extensible Code: swap implementations without rewriting the system.

- Hierarchical and Visual Structures: manage complexity through layered, visualized architecture.

Future posts will dig deeper into each pillar and the tooling that supports them, including the Hamilton framework from DAGWorks, plus my own packages Hypster and HyperNodes.